Word Embedding: Representing Words as Numbers for AI

Have you ever wondered how computers understand language? It’s a fascinating journey that starts with a seemingly simple yet profound concept: word embedding. Imagine being able to capture the essence of words, their meanings, and their relationships, all in a list of numbers. That’s exactly what word embedding does! It’s like giving computers a secret decoder ring for human language, turning the nuances of our words into a form they can process. This technique underpins many artificial intelligence (AI) applications, from chatbots to language translation services. In this blog post, we’ll dive deep into the world of word embedding, exploring how it works, why it’s so important, and how it’s shaping the future of AI and natural language processing (NLP).

The Building Blocks: What Exactly is Word Embedding?

Defining Word Embedding

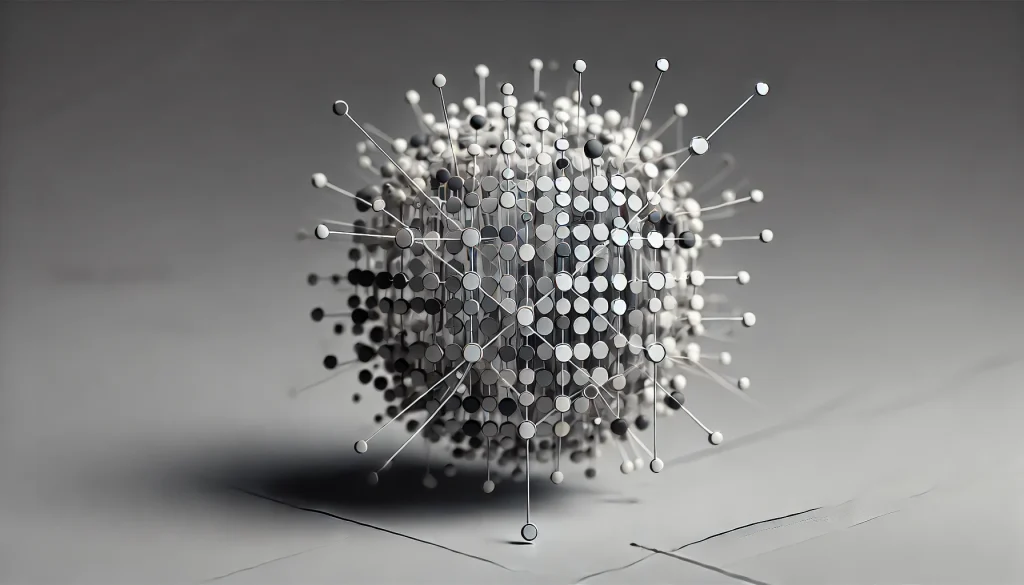

At its core, word embedding is a technique that represents words as vectors of real numbers. But what does that really mean? Let’s break it down. Imagine each word in a language as a point in a vast, multidimensional space. The coordinates of this point are a series of numbers, and these numbers together form the word’s “embedding.” This numerical representation captures aspects of the word’s meaning, usage, and relationships with other words. It’s a bit like a DNA sequence for each word, with one caveat: a single number on its own rarely maps to a human-readable trait. The information lives in the pattern across all the numbers, and above all in how close one word’s point sits to another’s. This approach allows computers to perform mathematical operations on words: measuring similarities, identifying patterns, and even making predictions about language use.

From Words to Vectors: The Magic Transformation

So how do we go from plain text to these numerical vectors? The process involves learning algorithms and large datasets. These algorithms analyze vast amounts of text, looking at how words are used in context, how often they occur, and which other words they appear alongside. They then distill this information into a set of numbers for each word. For example, the word “king” might be represented as [0.50, -0.23, 0.65, …], while “queen” could be [0.48, -0.21, 0.67, …]. These values are made up for illustration, but the point holds: the two vectors are nearly identical, and that closeness reflects the related meanings of the words. Once words are vectors, the question “how similar are these two words?” becomes a calculation a computer can run quickly, which is what makes so many linguistic tasks tractable.
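To make “similarity between vectors” concrete, here is a minimal Python sketch using cosine similarity, one common way to compare embeddings. It uses only the three components quoted above for “king” and “queen” (the real vectors would be much longer), and the “banana” vector is a hypothetical value invented purely for contrast.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Only the first three components quoted in the text; the rest is elided there.
king = [0.50, -0.23, 0.65]
queen = [0.48, -0.21, 0.67]
# Hypothetical vector for an unrelated word, invented for this example.
banana = [-0.40, 0.70, 0.05]

sim_king_queen = cosine_similarity(king, queen)
sim_king_banana = cosine_similarity(king, banana)

print(f"king vs queen:  {sim_king_queen:.3f}")
print(f"king vs banana: {sim_king_banana:.3f}")
```

With these toy numbers, “king” and “queen” score very close to 1, while “king” and “banana” score below zero. Real systems run the same calculation over vectors with hundreds of dimensions.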

The Evolution of Word Representation in AI

From One-Hot Encoding to Distributed Representation

The journey of representing words for AI didn’t start with word embeddings. Initially, a simpler method called one-hot encoding was used. Imagine a giant spreadsheet with one row per word and one column for every entry in the vocabulary. In each word’s row, you’d put a 1 in that word’s own column and 0s everywhere else. This method, while straightforward, had significant limitations. Every vector is as long as the vocabulary itself, and all words end up equally different from each other, so no semantic relationship is captured. For instance, “cat” and “kitten” would be as different as “cat” and “skyscraper” in this representation.

Enter distributed representation, the concept behind modern word embeddings. This approach represents words as dense vectors where the meaning is distributed across all dimensions. It’s like describing a word not by a single attribute, but by a combination of many subtle features, allowing for a much richer and more nuanced representation of language.
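To see the one-hot limitation in action, here is a small Python sketch with a three-word vocabulary taken from the example above. The helper names `one_hot` and `dot` are our own, chosen just for illustration.

```python
vocabulary = ["cat", "kitten", "skyscraper"]

def one_hot(word, vocab):
    """Return a list with a 1 at the word's position and 0s everywhere else."""
    return [1 if entry == word else 0 for entry in vocab]

def dot(a, b):
    """Dot product: a simple overlap score between two vectors."""
    return sum(x * y for x, y in zip(a, b))

cat = one_hot("cat", vocabulary)
kitten = one_hot("kitten", vocabulary)
skyscraper = one_hot("skyscraper", vocabulary)

print(cat)                   # [1, 0, 0]
print(dot(cat, kitten))      # 0
print(dot(cat, skyscraper))  # 0
```

Both overlap scores are zero: as far as one-hot vectors are concerned, “kitten” is no closer to “cat” than “skyscraper” is. Dense embeddings exist to fix exactly this.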

The Breakthrough: Word2Vec and Beyond

The real game-changer in word embedding came with the introduction of Word2Vec by Google researchers in 2013. This model changed the field by producing word embeddings that captured semantic and syntactic relationships between words as directions in the vector space. Suddenly, simple vector arithmetic could recover analogies: “king” is to “man” as “queen” is to “woman,” or “Paris” is to “France” as “Berlin” is to “Germany.” In practice, this means that taking the vector for “king,” subtracting “man,” and adding “woman” lands close to the vector for “queen.” This breakthrough opened up a world of possibilities in natural language processing. Since then, numerous other models have emerged, each bringing its own improvements and specializations. From GloVe (Global Vectors for Word Representation) to FastText and beyond, these models have continually pushed the boundaries of what’s possible in AI’s understanding of language.
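The analogy idea can be sketched in a few lines of Python. Note the assumptions: the two-dimensional vectors below are hand-made toys, not output from Word2Vec or any trained model, and the `analogy` helper is our own illustrative function. Real embeddings are learned from text and only approximately show this behavior.

```python
import math

# Hypothetical 2-D toy vectors, hand-picked so the analogy works out cleanly.
vectors = {
    "king":  [0.9, 0.8],
    "queen": [0.9, -0.8],
    "man":   [0.1, 0.8],
    "woman": [0.1, -0.8],
    "apple": [-0.7, 0.0],
}

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def analogy(a, b, c, table):
    """Answer 'a is to b as c is to ?' via vec(b) - vec(a) + vec(c)."""
    target = [vb - va + vc for va, vb, vc in zip(table[a], table[b], table[c])]
    # Exclude the three input words, as analogy evaluations usually do.
    candidates = [w for w in table if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(table[w], target))

result = analogy("man", "king", "woman", vectors)
print(result)  # queen
```

The nearest remaining word to “king” minus “man” plus “woman” is “queen,” because the toy vectors were built that way. With trained embeddings the match is rarely exact, which is why the nearest neighbor is used instead of an equality check.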

The Magic Behind the Numbers: How Word Embedding Works

Context is King: The Distributional Hypothesis

At the heart of word embedding lies a simple yet powerful idea known as the distributional hypothesis. This hypothesis suggests that words that appear in similar contexts tend to have similar meanings. It’s the linguistic equivalent of “you are the company you keep.” For example, the words “dog” and “cat” often appear in similar contexts – they’re both pets, they’re mentioned in sentences about animals, homes, and care. This contextual similarity is what word embedding algorithms capture. They analyze vast corpora of text, looking at the words that frequently appear around each target word. By doing so, they can create a numerical representation that reflects these contextual relationships, effectively mapping out the semantic landscape of language.
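Here is a rough Python sketch of the distributional hypothesis at work. It counts which words appear within two positions of each word in a tiny invented corpus. The sentences, the window size, and the `context_counts` helper are all assumptions made for this example; real systems use billions of words.

```python
from collections import Counter, defaultdict

# Tiny invented corpus, just large enough to show the effect.
corpus = [
    "the dog sleeps on the sofa",
    "the cat sleeps on the sofa",
    "we feed the dog every morning",
    "we feed the cat every morning",
    "the skyscraper towers over the city",
]

def context_counts(sentences, window=2):
    """For each word, count the words seen within `window` positions of it."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            start = max(0, i - window)
            stop = min(len(tokens), i + window + 1)
            for j in range(start, stop):
                if j != i:
                    counts[word][tokens[j]] += 1
    return counts

counts = context_counts(corpus)
shared_dog_cat = set(counts["dog"]) & set(counts["cat"])
shared_dog_skyscraper = set(counts["dog"]) & set(counts["skyscraper"])

print("dog & cat share:", sorted(shared_dog_cat))
print("dog & skyscraper share:", sorted(shared_dog_skyscraper))
```

In this corpus “dog” and “cat” have identical context counts, while “dog” and “skyscraper” share only the word “the.” Embedding algorithms compress this kind of contextual evidence into dense vectors.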

Training the Model: Neural Networks and Prediction Tasks

So how do we actually create these embeddings? The process typically involves training a neural network on a prediction task. One common approach is the skip-gram model, where the network tries to predict the context words given a target word. For instance, given the word “dog,” the model might try to predict words like “bark,” “pet,” or “walk” that often appear nearby. Another approach is the continuous bag-of-words (CBOW) model, which does the opposite: it tries to predict a target word given its context. Both are training setups used by Word2Vec. The prediction task itself is only a means to an end. What we keep afterwards are the weights the network learned for each word, and those weights are the embeddings. Through this training process, words with similar contexts end up close to each other in the vector space. It’s like the network is learning to organize words into a massive, multidimensional map based on their usage and meaning.
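The difference between skip-gram and CBOW is easiest to see in the training examples each one is fed. The Python sketch below only builds those examples from a sentence; it does not train a network. The function names, the example sentence, and the window size of 1 are our own choices for illustration.

```python
def skipgram_pairs(tokens, window=2):
    """(target, context) pairs: predict each nearby word from the target."""
    pairs = []
    for i, target in enumerate(tokens):
        start = max(0, i - window)
        stop = min(len(tokens), i + window + 1)
        for j in range(start, stop):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs

def cbow_examples(tokens, window=2):
    """(context_words, target) examples: predict the target from its neighbors."""
    examples = []
    for i, target in enumerate(tokens):
        start = max(0, i - window)
        stop = min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(start, stop) if j != i]
        examples.append((context, target))
    return examples

tokens = "the dog likes to bark".split()
sg = skipgram_pairs(tokens, window=1)
cb = cbow_examples(tokens, window=1)

print(sg[:3])  # [('the', 'dog'), ('dog', 'the'), ('dog', 'likes')]
print(cb[1])   # (['the', 'likes'], 'dog')
```

Skip-gram turns each word into several small prediction problems, one per neighbor, while CBOW bundles the neighbors into a single problem per word. A real implementation would then feed these examples to a neural network and keep the learned word weights.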

The Power of Word Embedding: Applications and Impact

Revolutionizing Natural Language Processing

Word embedding has been nothing short of revolutionary for natural language processing. By providing a way to represent words that captures their meaning and relationships, it has dramatically improved the performance of various NLP tasks. Take machine translation, for example. Word embeddings allow translation systems to understand not just word-for-word correspondences, but the deeper semantic relationships between words in different languages. This leads to more natural, context-aware translations. Similarly, in sentiment analysis, word embeddings help systems understand the nuanced meanings of words in context, leading to more accurate assessments of the sentiment expressed in texts. From chatbots to voice assistants, the ability to understand and generate human-like language has taken a quantum leap forward thanks to word embedding techniques.

Beyond Language: Cross-Domain Applications

The impact of word embedding extends far beyond just language processing. These techniques have found applications in various fields where understanding relationships between entities is crucial. In recommendation systems, for example, similar techniques can be used to represent products or user preferences, allowing for more accurate and personalized recommendations. In bioinformatics, researchers have applied embedding techniques to represent genetic sequences, helping to uncover complex relationships in biological data. Even in fields like finance, embeddings have been used to represent market trends and financial instruments, aiding in predictive modeling and risk assessment. The ability to capture complex relationships in a format that computers can easily process has opened up new possibilities across a wide range of domains.

Challenges and Limitations: The Road Ahead

Bias in Language Models: A Reflection of Society

While word embeddings have brought remarkable advancements, they also come with their own set of challenges. One of the most significant issues is the problem of bias in language models. Since these models learn from real-world text data, they can inadvertently capture and amplify societal biases present in that data. For example, word embeddings trained on historical texts might associate certain professions more strongly with one gender over another, reflecting historical gender disparities rather than present-day realities. This can lead to AI systems that perpetuate or even exacerbate existing biases. Researchers and developers are actively working on methods to detect and mitigate these biases, but it remains an ongoing challenge in the field. It’s a stark reminder that AI systems, no matter how advanced, are not immune to the imperfections of the data they’re trained on.

The Contextual Conundrum: Dealing with Polysemy

Another limitation of traditional word embedding models is their struggle with polysemy, where a single word has multiple meanings depending on context. For instance, the word “bank” could refer to a financial institution or the edge of a river. Standard word embeddings assign a single vector to each word, so both senses of “bank” are squeezed into the same point and the contextual difference is lost. This limitation has led to the development of more advanced models, such as BERT (Bidirectional Encoder Representations from Transformers), which generate different embeddings for a word based on its context in a sentence. These contextual embedding models represent a significant step forward, but they also come with increased computational complexity and their own set of challenges. As the field continues to evolve, finding ways to efficiently capture the full richness and ambiguity of language remains a key area of research.

The Future of Word Embedding: What’s Next?

Multilingual and Cross-Lingual Embeddings

As our world becomes increasingly interconnected, the ability to process and understand multiple languages becomes ever more crucial. This has led to exciting developments in multilingual and cross-lingual word embeddings. These models aim to create a shared semantic space for multiple languages, allowing for improved machine translation, cross-lingual information retrieval, and even zero-shot learning where a model trained on one language can perform tasks in another. Imagine a world where language barriers in technology are a thing of the past, where AI can seamlessly understand and translate between any languages it encounters. This is the promise of multilingual embeddings, and it’s an area of intense research and rapid progress. As these models continue to improve, they have the potential to revolutionize global communication and information access.

Beyond Words: Sentence and Document Embeddings

While word embeddings have proven incredibly useful, language is more than just individual words. The next frontier in this field is effectively representing larger units of text – sentences, paragraphs, and entire documents. Researchers are developing techniques to create embeddings for these larger text units, capturing not just the meaning of individual words, but the overall context and meaning of longer pieces of text. These higher-level embeddings could enable more sophisticated text analysis, improved document classification, and more natural language understanding in AI systems. Imagine an AI that can truly understand the nuances of a complex argument or the subtle implications in a piece of literature. As we move towards these more comprehensive text representations, we’re inching closer to AI systems that can engage with language at a level that rivals human understanding.
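One very simple baseline for a sentence embedding is to average the vectors of the words in the sentence. The Python sketch below shows that baseline only; it is not the more advanced research described above, and it ignores word order entirely. The two-dimensional word vectors and the helper names are hypothetical, invented for this example.

```python
import math

# Hypothetical 2-D word vectors, hand-made for illustration.
word_vectors = {
    "cats":   [0.9, 0.1],
    "dogs":   [0.8, 0.2],
    "purr":   [0.7, 0.0],
    "bark":   [0.6, 0.3],
    "stocks": [0.0, 0.9],
    "fell":   [0.1, 0.7],
}

def sentence_embedding(sentence, table):
    """Average the vectors of the known words in a sentence (mean pooling)."""
    vecs = [table[w] for w in sentence.lower().split() if w in table]
    if not vecs:
        raise ValueError("no known words in sentence")
    dim = len(vecs[0])
    return [sum(v[k] for v in vecs) / len(vecs) for k in range(dim)]

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

pets_a = sentence_embedding("cats purr", word_vectors)
pets_b = sentence_embedding("dogs bark", word_vectors)
finance = sentence_embedding("stocks fell", word_vectors)

print(f"pets vs pets:    {cosine(pets_a, pets_b):.2f}")
print(f"pets vs finance: {cosine(pets_a, finance):.2f}")
```

With these toy vectors, the two pet sentences land much closer to each other than either does to the finance sentence. Because averaging discards word order, “dog bites man” and “man bites dog” would get the same embedding, which is one reason richer sentence-level models are an active research area.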

Conclusion

As we’ve explored in this journey through the world of word embedding, we’re witnessing a fundamental shift in how machines understand and process language. From the basic idea of representing words as numbers to the complex models that can capture the nuances of meaning and context, word embedding has become a cornerstone of modern AI and NLP. It’s enabling machines to engage with human language in ways that were once the realm of science fiction – translating between languages, understanding sentiment, answering questions, and even generating human-like text. But this is just the beginning. As researchers continue to refine these techniques, addressing challenges like bias and contextual understanding, and pushing into new frontiers like multilingual and document-level embeddings, we’re moving towards a future where the barrier between human and machine communication becomes increasingly blurred.

The implications of these advancements are profound and far-reaching. They promise to make technology more accessible, breaking down language barriers and opening up new possibilities for global communication and understanding. They’re enabling more natural and intuitive interactions with AI systems, from virtual assistants to automated customer service. And they’re providing powerful tools for analyzing and deriving insights from the vast amounts of textual data generated in our digital world. As we look to the future, it’s clear that word embedding and its evolving offshoots will continue to play a crucial role in shaping the landscape of AI and our interaction with technology. It’s an exciting time to be at the intersection of language and technology, and the journey is far from over. The revolution in AI’s understanding of language is ongoing, and word embedding is at its heart, continually pushing the boundaries of what’s possible in the realm of artificial intelligence.

Disclaimer: This blog post provides an overview of word embedding techniques based on current understanding and research. As this is a rapidly evolving field, some information may become outdated over time. While we strive for accuracy, we encourage readers to consult the latest research and authoritative sources for the most up-to-date information. If you notice any inaccuracies, please report them so we can correct them promptly.